Large Language Models Could Re-Decentralize the Internet

Finally, interoperability is easy.

One of my favorite ways to evaluate a new technology is to understand how it might centralize or decentralize different types of human endeavors. Large Language Models (LLMs) are poised to be a highly decentralizing force, even if they centralize computation.

Initially, I thought LLMs and other generative AI services would be a centralizing force because they continue the cloud computing trend of centralizing computation. I assumed that only a few large companies could afford the capital investment required to train powerful machine learning models like GPT-4, whose training is expected to cost over $50 million.

There are indications, however, that AI might not centralize computation as much as I initially thought. For example, projects like Stable Diffusion have shown that even generative AI computation can be decentralized. Ultimately, people may be able to use generative AI on their personal devices, without relying on centralized services. Ben Thompson at Stratechery has described the AI Unbundling, including how LLMs once thought to require supercomputers can now be run on much smaller machines.

Certainly, generative AI tools like DALL-E and ChatGPT will decentralize many non-computation industries by empowering individuals relative to institutions and organizations. The improved ability of individuals to quickly brainstorm, draft, and generate high-quality content will shrink the scale advantage that large content-generating institutions (for example, software developers, law firms, marketing firms, and studios) have over smaller or solo firms.

Yet I believe LLMs will have an even more powerful decentralizing effect through their ability to solve interoperability issues between various user-facing software services.

The Promise of Interoperability

Interoperability allows different systems, devices, software applications, or services to communicate, exchange, and effectively use information with one another. It often requires adhering to common standards, protocols, or interfaces. This can improve user experience, streamline workflows, and reduce costs.

In the policy sphere, interoperability is frequently pointed to as a remedy to competition concerns such as “lock-in” and “monopolization.”

Cory Doctorow has described a taxonomy of interoperability, with three categories: cooperative, indifferent, and adversarial. LLMs offer new possibilities for indifferent and adversarial interoperability and will even enable a new era of automated, dynamic cooperative interoperability.

Cooperative interoperability is a common approach in software, typically through the use of Application Programming Interfaces (APIs). These APIs provide a predefined set of rules, functions, and protocols that allow developers to create software that can interact with other software. Organizations like the World Wide Web Consortium (W3C) establish standards for web-based interoperability, while industry-specific initiatives, such as the Fast Healthcare Interoperability Resources (FHIR) standard for healthcare, have emerged to address the unique challenges within various sectors.

Cooperative interoperability is difficult and time-consuming because the parties can have unaligned interests. Even once established, standards are necessarily brittle and static. If you modify an API to add or change functionality, you might break all the software that uses it.
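
To make that brittleness concrete, here is a minimal sketch. The service, its two versions, and the field names are all hypothetical, invented only for illustration: a provider renames one field, and a client written against the old version stops working.

```python
# Hypothetical service responses: v2 renames "user_name" to "username".
def get_profile_v1():
    return {"user_name": "ada", "followers": 10}


def get_profile_v2():
    return {"username": "ada", "followers": 10}


def client_display_name(profile):
    # Client written against v1: the field name is hard-coded.
    return profile["user_name"].title()


def client_works(fetch):
    """Return True if the v1-era client can still read the response."""
    try:
        client_display_name(fetch())
        return True
    except KeyError:
        return False


print(client_works(get_profile_v1))  # True
print(client_works(get_profile_v2))  # False
```

Nothing about the service's actual capability changed between the two versions, yet every client pinned to the old field name fails until a human updates it.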

LLMs like ChatGPT can facilitate indifferent and adversarial interoperability by serving as "universal APIs" between different user interfaces. User interfaces are the parts of software that we humans interact with. They employ textual and graphical languages that we can interpret, and such interfaces are part of the content that modern LLMs have been trained on.

LLMs can easily translate such user interfaces into more formal, API-like code. This capability makes it possible to create on-the-fly APIs for any service with a user interface. A web browser with LLM capabilities could, for example, interact automatically on your behalf with Facebook or Twitter through the same user interface that you would use. LLMs could even provide connections between services similar to what Zapier and IFTTT do. Unlike those services, however, they could work even when the software services being connected offer only a user interface, with no formal API exposed. Perhaps most usefully, if a webpage or service changes its user interface, LLMs would be able to adjust without the need for a human to rewrite the API.
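
As a rough illustration of what "translating a user interface into API-like code" could mean, the sketch below derives a small endpoint description from an HTML form. It makes a big simplifying assumption: a deterministic parser from Python's standard library stands in for the LLM, and the pages, field names, and spec format are hypothetical. A real LLM-driven browser would have to cope with far messier interfaces.

```python
from html.parser import HTMLParser


class FormToSpec(HTMLParser):
    """Collect the action, method, and named fields of the first form seen."""

    def __init__(self):
        super().__init__()
        self.spec = None
        self._in_form = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "form" and self.spec is None:
            self.spec = {
                "endpoint": a.get("action") or "",
                "method": (a.get("method") or "get").upper(),
                "params": [],
            }
            self._in_form = True
        elif self._in_form and tag in ("input", "textarea", "select") and a.get("name"):
            self.spec["params"].append(a["name"])

    def handle_endtag(self, tag):
        if tag == "form":
            self._in_form = False


def derive_spec(html):
    """Stand-in for the LLM step: read the UI, return an API-like description."""
    parser = FormToSpec()
    parser.feed(html)
    return parser.spec


# Hypothetical page, before and after a redesign.
PAGE_V1 = """
<form action="/post" method="post">
  <textarea name="status"></textarea>
  <input type="hidden" name="csrf_token" value="abc">
  <input type="submit" value="Post">
</form>
"""
PAGE_V2 = """
<form action="/compose" method="post">
  <input name="message">
  <input type="submit" value="Send">
</form>
"""

print(derive_spec(PAGE_V1))
print(derive_spec(PAGE_V2))
```

The point of the second call is that the description is re-derived from whatever the interface currently looks like, rather than maintained by hand when the page changes.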

Redefining Computation and Communication

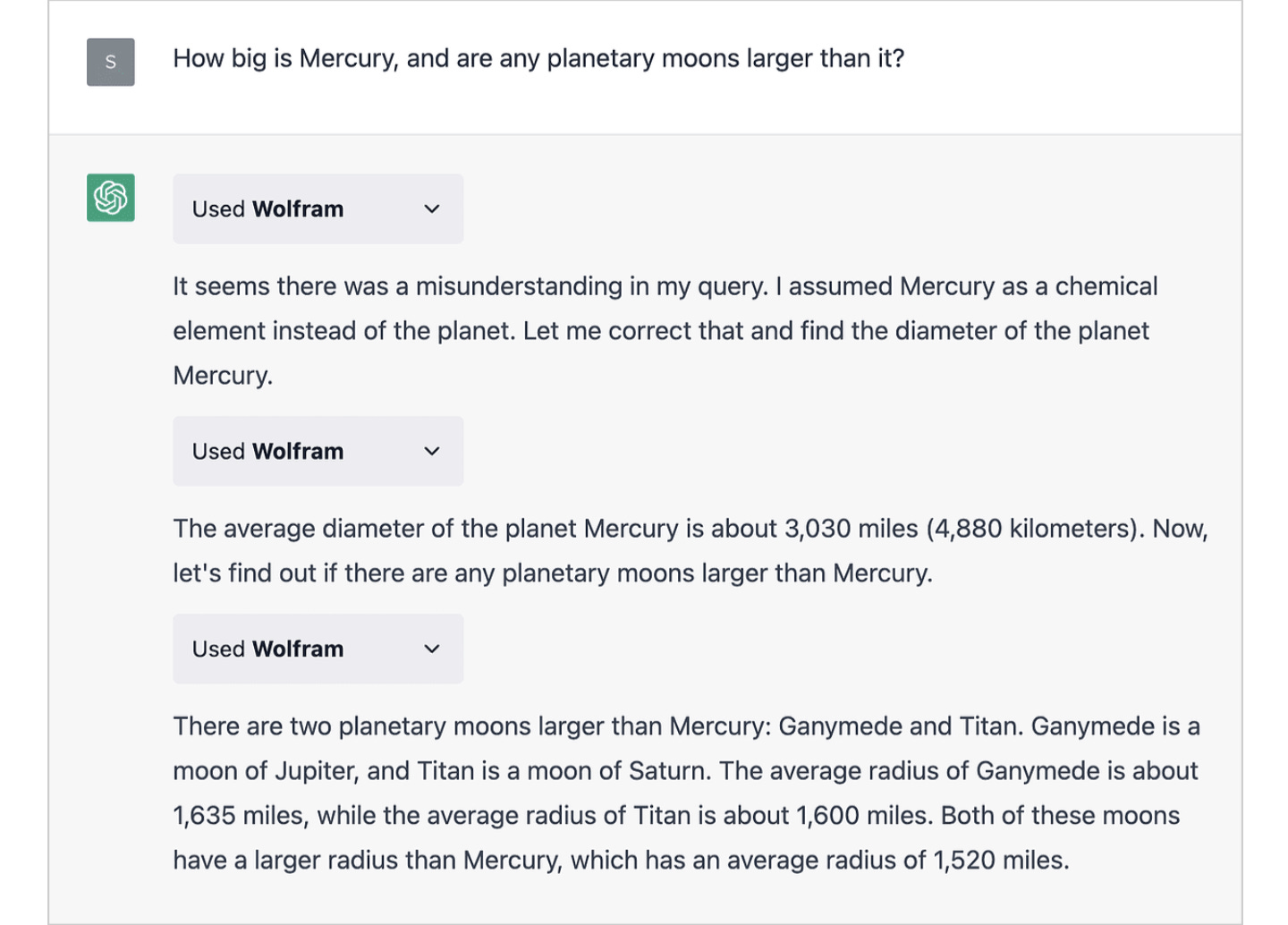

But the more “sci-fi” and exciting result to me is the future potential for automated cooperative interoperability. This future might be very near. Look at this screenshot of ChatGPT using the Wolfram Alpha plugin:

Notice how ChatGPT and Wolfram Alpha are having a conversation to get to the correct result. I am not sure whether they are doing so through a well-defined, static API or by passing natural language queries and responses back and forth, but the latter seems plausible.

What does this ability of LLMs to engage in interoperability mean?

Natural Language as Universal API. APIs between software may become less formalized and more iterative, perhaps communicating primarily through natural language.

Automatically Negotiated APIs. Future software programs might negotiate how to communicate through an iterative and dynamic process starting at a natural language level but quickly evolving a more specific, efficient, built-to-purpose interface. This would mitigate the legacy support problem of cooperative interoperability APIs. If one service changes the interface, this would just start a new conversation to evolve an efficient API. I imagine APIs will co-evolve between two services, similar to how human twins sometimes develop their own languages through continuous communication with each other.
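
One way to picture such a negotiation is the toy handshake below. It is only a sketch under stated assumptions: simple capability matching stands in for the LLM-mediated conversation, and the service names and format labels are hypothetical. Two services settle on the most specific format they both support, fall back to natural language when they share none, and simply renegotiate when one side changes.

```python
class Service:
    def __init__(self, name, formats):
        self.name = name
        self.formats = list(formats)  # ordered by this service's preference
        self.agreed = {}              # peer name -> format currently in use


def negotiate(a, b):
    """Pick the first format in a's preference order that b also supports.

    If the two services share no structured format, fall back to
    exchanging natural language.
    """
    choice = "natural-language"
    for fmt in a.formats:
        if fmt in b.formats:
            choice = fmt
            break
    a.agreed[b.name] = choice
    b.agreed[a.name] = choice
    return choice


calendar = Service("calendar", ["protobuf-v2", "json-v1"])
mail = Service("mail", ["json-v1", "csv"])
print(negotiate(calendar, mail))   # json-v1

# The mail service changes its interface; the pair just negotiates again.
mail.formats = ["json-v2", "protobuf-v2"]
print(negotiate(calendar, mail))   # protobuf-v2

# A service with no shared structured format falls back to natural language.
notes = Service("notes", ["markdown"])
print(negotiate(calendar, notes))  # natural-language
```

In this picture an interface change is not a breaking event for the peer. It is just the trigger for another round of negotiation.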

Adversarial Interoperability Becomes Easier. Adversarial interoperability is going to become easier to do and harder to prevent. LLMs will be able to read and interact with the same interface humans use. If a service provider attempts to break the interface for LLM use, it will probably break it for human users, too.

Interoperability as a Data Source. Centrally provided LLMs offering these API-like translation services would have a bird’s-eye view of the entire ecosystem of platform-to-platform interactions. Such LLMs would be well-positioned to learn general principles on how to better mediate communication between different pieces of software, evolving and becoming more efficient over time. Thus, even centralization of the LLM would enable enormous modularity and flexibility elsewhere.

No Need for Interoperability Mandates. Advocates for interoperability often turn to regulation to overcome the business incentives and coordination costs that can prevent cooperative interoperability. To the extent that LLMs ease the practical difficulties of developing APIs and make it more difficult to block adversarial interoperability, government mandates become less necessary.

Conclusion

Large language models, with their ability to process and understand natural language, introduce new possibilities for interoperability. Even if these LLMs tend to centralize computation, their empowerment of individual creators and their facilitation of cooperative, indifferent, and adversarial interoperability will generate an explosion of decentralization and new avenues for competition.