Stephen Wolfram on LLMs as Interfaces

Natural language is a flexible API

The AI landscape is awash in news: a new Senate AI roadmap to compete with the White House’s unprecedented (and potentially unconstitutional) Executive Order, new AI models, Black Widow (Scarlett Johansson) taking on OpenAI, and 600+ state bills (including California’s terrible SB 1047). In AI policy, one tries to engage on the latest issue and make an impact before the next wave of news crests.

Still, in this ocean of news there is one beacon of an idea that keeps drawing my attention: could LLMs be interfaces between systems? Back in April 2023, in “Large Language Models Could Re-Decentralize the Internet,” I wrote:

> LLMs like ChatGPT can facilitate indifferent and adversarial interoperability by serving as "universal APIs" between different user interfaces. User interfaces are the parts of software that we humans interact with. They employ textual and graphical languages that we can interpret, and such interfaces are part of the content that modern LLMs have been trained on.
>
> LLMs can easily translate such user interfaces into more formal, API-like code. This capability makes it possible to create on-the-fly APIs for any service with a user interface.

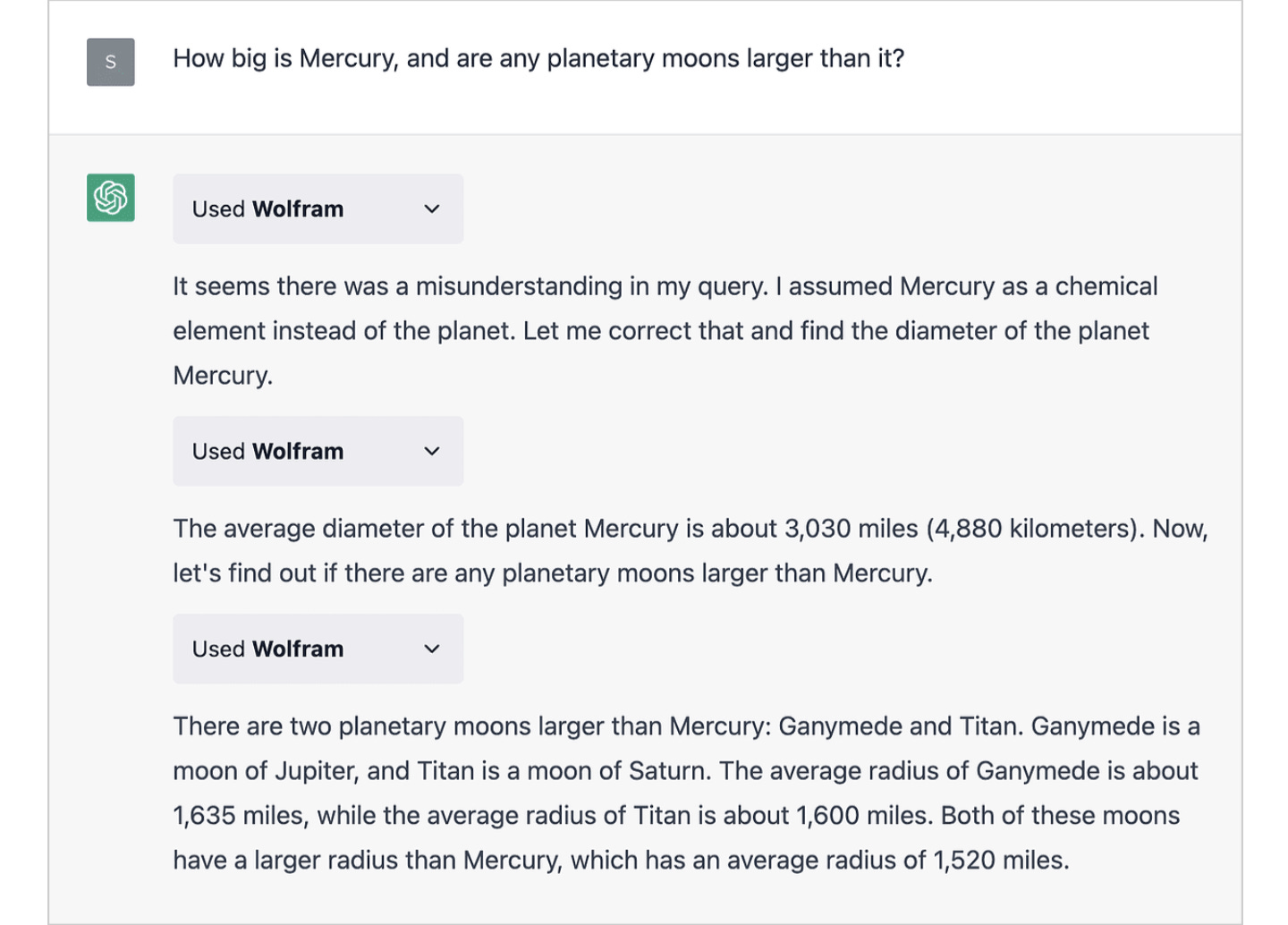

In that piece, I pointed to ChatGPT’s interactions with the computational knowledge engine Wolfram Alpha, which looked like a natural-language interface between the two systems.

Apparently, brilliant computer scientist Stephen Wolfram, who created Wolfram Alpha, shares my conviction that LLMs can be a universal interface. In a great interview with Reason magazine’s Katherine Mangu-Ward, he notes:

> I think the thing to realize about AIs for language is that what they provide is kind of a linguistic user interface. A typical use case might be you are trying to write some report for some regulatory filing. You've got five points you want to make, but you need to file a document.

> So you make those five points. You feed it to the LLM. The LLM puffs out this whole document. You send it in. The agency that's reading it has their own LLM, and they're asking their LLM, "Find out the two things we want to know from this big regulatory filing." And it condenses it down to that. So essentially what's happened is **you've used natural language as a sort of transport layer that allows you to interface one system to another.** (emphasis added)

This kind of translation sounds wasteful. Why not just send the five bullet points, as Mangu-Ward asks? It is somewhat wasteful, but it is also extremely powerful, because connecting different systems is difficult. As Wolfram responds:

> [I]t's just convenient that you've got these two systems that are very different trying to talk to each other. Making those things match up is difficult, but if you have this layer of fluffy stuff in the middle, that is our natural language, it's actually easier to get these systems to talk to each other.

My piece focused on using LLMs to translate between different computer systems. Wolfram’s point is broader: we use language to move ideas from one brain to another and to translate from one system (an individual policy advocate) to another (a regulatory agency). More rigidly engineered protocols are brittle in the face of variation: when they encounter a new system, they are unlikely to connect to it. Natural language can bridge very different systems precisely because it is wasteful, redundant, and messy.

The ChatGPT-to-Wolfram Alpha integration was an early version of what is possible here. If you haven’t seen it, check out this recent live GPT-4o demo showing two chatbots interfacing, through spoken language, with a human and with each other.

A technology capable of doing this should likewise be capable of interacting with typical human-computer interfaces. I haven’t seen anyone roll out a service like this yet (it seems a natural fit for Zapier or IFTTT). My colleague Logan did write about the “Large Action Model” software underlying the Rabbit R1, which sounds similar to what I am imagining:

> Rabbit’s LAM is trained to interact with the UI, which theoretically means that the LAM can interact with every application on the internet. In other words, if you ask Rabbit R1 to call an Uber, it will interact with Uber’s website the same way that a human would and because this interaction isn’t being driven by a neural network, the action will be interpretable and traceable.

The R1’s reception has been rocky, but even if Rabbit hasn’t succeeded, I still think LLMs as universal interfaces are coming.

So I’ll re-up the tech, competition, and business-model implications I laid out last year. I’m planning to write more on each of these in the near future.

Natural Language as Universal API. APIs between software systems may become less formalized and more iterative, with programs perhaps communicating primarily through natural language.

Automatically Negotiated APIs. Future software programs might negotiate how to communicate through an iterative, dynamic process that starts at the natural-language level but quickly evolves a more specific, efficient, built-to-purpose interface. This would mitigate the legacy-support problem of cooperative interoperability APIs: if one service changed its interface, that would simply start a new conversation to evolve a new efficient API. I imagine APIs will co-evolve between two services, similar to how human twins sometimes develop their own languages through continuous communication with each other.
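A toy Python sketch of that kind of negotiation, under heavy simplifying assumptions: the `Link` class, the two-round `PROMOTE_AFTER` threshold, and the `P|`/`C|` wire prefixes are all hypothetical, a single shared object models the agreement both peers would hold, and a fixed sentence template stands in for the LLM that would actually read the prose. Messages start as verbose, self-describing sentences; once the same fields have arrived twice in a row, the link switches to a compact positional format; if the sender's fields change, it falls back to prose and the negotiation starts over.

```python
from dataclasses import dataclass
from typing import Optional

PROMOTE_AFTER = 2  # hypothetical: matching prose rounds before going compact


def to_prose(record: dict[str, str]) -> str:
    """Verbose, self-describing form: every value travels with its label."""
    return " ".join(f"The {key} is {value}." for key, value in record.items())


def from_prose(text: str) -> dict[str, str]:
    """Toy stand-in for an LLM reading prose; it only understands to_prose output."""
    record = {}
    for sentence in text.split(". "):
        key, _, value = sentence.removeprefix("The ").removesuffix(".").partition(" is ")
        record[key] = value
    return record


@dataclass
class Link:
    """Agreement shared by two peers on one connection (modeled as one object)."""

    schema: Optional[tuple[str, ...]] = None     # agreed compact field order
    last_keys: Optional[tuple[str, ...]] = None  # field set of the last prose message
    streak: int = 0                              # consecutive prose messages with that field set

    def send(self, record: dict[str, str]) -> str:
        keys = tuple(record)
        if keys == self.schema:
            return "C|" + "|".join(record[k] for k in keys)  # compact, positional
        return "P|" + to_prose(record)  # no agreement for these fields: use prose

    def receive(self, message: str) -> dict[str, str]:
        kind, _, body = message.partition("|")
        if kind == "C":
            return dict(zip(self.schema, body.split("|")))
        record = from_prose(body)
        keys = tuple(record)
        if keys != self.schema:
            self.schema = None  # sender's interface changed: drop the old agreement
        self.streak = self.streak + 1 if keys == self.last_keys else 1
        self.last_keys = keys
        if self.streak >= PROMOTE_AFTER:
            self.schema = keys  # settle on a compact, built-to-purpose format
        return record


link = Link()
ride = {"rider": "Ana", "pickup": "Main St"}
for _ in range(3):
    wire = link.send(ride)
    print(wire, "->", link.receive(wire))
```

Running it shows two prose exchanges followed by the compact `C|Ana|Main St`. Sending a record with an extra field (say `car`) drops the link back to prose until the new field set has been seen twice, which is the "new conversation" described above. A real system would negotiate far richer formats, and values containing `|` or sentence punctuation would break this toy encoding.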

Adversarial Interoperability Becomes Easier. Adversarial interoperability is going to become easier to do and harder to prevent, because LLMs will be able to read and interact with the same interfaces humans use. If a service provider attempts to break its interface for LLM use, it will probably break it for human users, too.
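To make this concrete, here is a toy Python sketch, not a description of how any real agent (Rabbit's LAM included) works. It reads the human-visible labels of an HTML form and derives an on-the-fly "API" that maps each label to the field name the server expects. The page markup, the field names, and the `FormReader`/`fill_form` helpers are hypothetical, and exact label matching stands in for the fuzzy mapping an LLM would do in practice.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urlencode


class FormReader(HTMLParser):
    """Collects the human-visible label for each <input> on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.label_for: dict[str, str] = {}  # input id -> lowercased label text
        self.name_of: dict[str, str] = {}    # input id -> field name the server expects
        self._open_label: Optional[str] = None  # id targeted by the <label> being read

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "label":
            self._open_label = attr.get("for")
        elif tag == "input" and attr.get("id") and attr.get("name"):
            self.name_of[attr["id"]] = attr["name"]

    def handle_endtag(self, tag):
        if tag == "label":
            self._open_label = None

    def handle_data(self, data):
        if self._open_label and data.strip():
            self.label_for[self._open_label] = data.strip().lower()

    def derived_api(self) -> dict[str, str]:
        """On-the-fly 'API': visible label -> hidden field name."""
        return {self.label_for[i]: n for i, n in self.name_of.items() if i in self.label_for}


def fill_form(html: str, request: dict[str, str]) -> str:
    """Encode a request phrased in the page's own labels as form data.

    Exact (case-insensitive) label matching is a stand-in for the fuzzy
    mapping an LLM would do in a real agent.
    """
    reader = FormReader()
    reader.feed(html)
    api = reader.derived_api()
    return urlencode({api[label.lower()]: value for label, value in request.items()})


PAGE = """
<form action="/book">
  <label for="a">Pickup location</label><input id="a" name="pk_loc_v2">
  <label for="b">Destination</label><input id="b" name="dst">
</form>
"""

print(fill_form(PAGE, {"Pickup location": "Main St", "Destination": "Airport"}))
```

Because the sketch keys on the labels people actually read, renaming the hidden field `pk_loc_v2` does not break it; changing the visible labels would, and that change would confuse human users as well.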

Interoperability as a Data Source. Centrally provided LLMs offering this API-like translation service would have a bird’s-eye view of the entire ecosystem of platform-to-platform interactions. Such LLMs would be well positioned to learn general principles for mediating communication between different pieces of software, evolving and becoming more efficient over time. Thus, even a centralized LLM could enable enormous modularity and flexibility elsewhere.

No Need for Interoperability Mandates. Advocates for interoperability often turn to regulation to overcome the business incentives and coordination costs that can prevent cooperative interoperability. To the extent that LLMs ease the practical difficulties of developing APIs and make it more difficult to block adversarial interoperability, government mandates become less necessary.

Happy Memorial Day weekend! I hope you spend it using natural language to interface with friends and family.