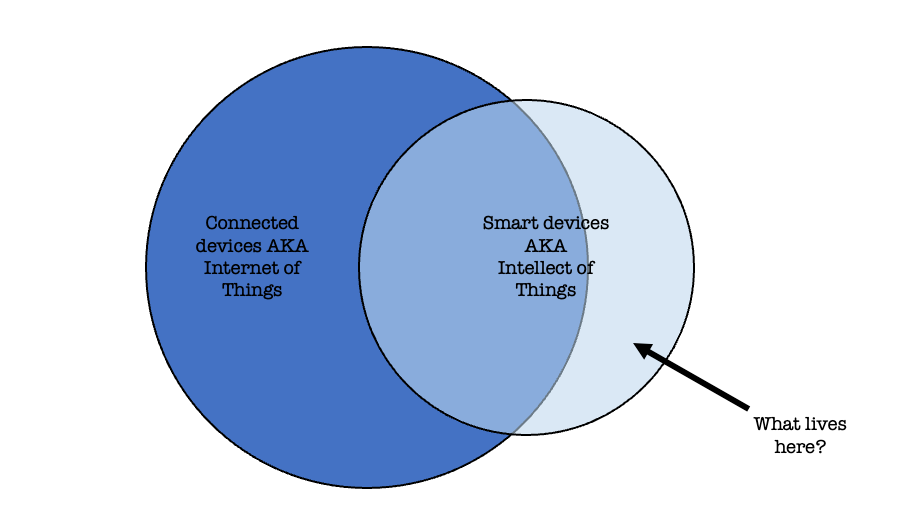

Ethan Mollick’s “An AI Haunted World” argues that Large Language Models (LLMs) like ChatGPT are going to be everywhere. He describes how open-source models, developed by companies like Mistral, are increasingly accessible and installable on smaller, less powerful devices. The trend is toward a future of ubiquitous AI, where many everyday objects are smart-ish. Remember the “Internet of Things”? Here comes the Intellect of Things:

I can already run the fairly complex Mistral model on my recent model iPhone, but, just a week ago, Microsoft released a model that [is] just as capable, but only a third as taxing on my poor phone. Between increasing hardware speeds (thanks, Moore’s Law) and increasingly optimized models, it is going to be trivial to run decent LLMs on almost everything. Someone already has an AI running on their watch.

Since LLMs are capable of more complex “reasoning” than a simple Siri or Alexa and do not need to be connected to the internet, we will soon be in a world where a surprisingly large number of products have intelligence of a sort. And because it is incredibly cheap to modify an LLM (which already has a lot of underlying training) to understand a new situation, you don’t need a lot of software development effort to make anything “smart.” Your dishwasher can troubleshoot its own issues, your security system can figure out if something suspicious is happening in your house, and your exercise equipment can persuade you to work a little harder.

I have some doubts about how well LLMs will be able to “reason” through troubleshooting situations, given that they lack an explicit model of logic. Still, when I used ChatGPT to troubleshoot my dad’s stalling riding lawnmower, it helped us find and fix a somewhat obscure problem. Maybe reasoning is overrated?

By all reports, nearly every vendor at last month’s Consumer Electronics Show had an AI pitch. Still, I don’t think we’ve thought enough about what intelligence everywhere might look like. Most people are extrapolating AI’s future from today’s app-based chatbots.

Mollick’s essay prompts me to imagine a world where every device can explain how to use it, in real time, as you use it. I envision asking my washing machine’s LLM, trained on its own manual and loads (pun intended) of usage data, whether “deep rinse” or “extra rinse” would be better for dirty kids’ clothes, and getting an answer back.

The Power of Ubiquity

The Intellect of Things could unlock substantial productivity in existing products. Many product features go unused, partly because users are busy, instructions are unclear, and interfaces are obtuse. A 2019 study by product analytics company Pendo estimated that 80% of software features are rarely or never used. Often, consumers would use those features if they knew about them: a Scientific American article quotes one exasperated Microsoft employee as saying, “[M]ost feature requests the company gets for Microsoft Office are, in fact, already features of Microsoft Office.”

We also miss features on physical objects and devices. A whole genre of online listicles and videos highlights often-overlooked functions and features of common household products. My own example: I was a driver for more than a decade before I learned that most cars’ fuel gauges have an arrow pointing to the side of the car with the gas cap. It’s a great time-saver for rental cars!

Better documentation isn’t the answer. The same Scientific American article explains that most people don’t read user manuals, even for expensive physical devices like washing machines or automobiles, and even when something goes wrong. Instead, people increasingly troubleshoot problems using Google search and YouTube. Those platforms make solutions easier to find and to learn from than a written manual.

LLMs could further streamline the troubleshooting process by offering help from the device itself. Augmenting a base LLM with all the design, marketing, and consumer support documentation for a product could turn your washing machine or car into a queryable expert on its own operation, ready to help right when and where the problem occurs.
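One common way to do this kind of augmentation is retrieval: look up the passages of the product’s documentation most relevant to a user’s question and hand them to the model along with the question. The Python sketch below is a toy illustration under loud assumptions: the manual excerpts, the function names (`retrieve`, `build_prompt`), and the simple word-overlap scoring are all hypothetical stand-ins (real systems typically rank passages with embeddings), and it only builds the prompt rather than calling any particular on-device model.

```python
import re

# Hypothetical manual excerpts for an imaginary washing machine; a real
# product would draw on its actual design, marketing, and support documents.
MANUAL = {
    "deep rinse": "Deep Rinse adds a longer soak before the final rinse to loosen embedded soil.",
    "extra rinse": "Extra Rinse adds one more rinse cycle to remove leftover detergent.",
    "error e21": "Error E21 means the drain is slow; clean the drain filter behind the lower panel.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 2) -> list[str]:
    """Return the k manual passages that share the most words with the question."""
    q = tokenize(question)
    scored = sorted(
        MANUAL.items(),
        key=lambda item: len(q & tokenize(item[0] + " " + item[1])),
        reverse=True,
    )
    return [passage for _, passage in scored[:k]]


def build_prompt(question: str) -> str:
    """Prepend the retrieved passages so the model answers from the product's own docs."""
    context = "\n".join(f"- {p}" for p in retrieve(question))
    return (
        "Answer using only this appliance's documentation:\n"
        f"{context}\n\nQuestion: {question}\nAnswer:"
    )


print(build_prompt("Should I use deep rinse or extra rinse for dirty clothes?"))
```

Word overlap is crude, but it keeps the sketch dependency-free. The point is that the device answers from its own documentation rather than from the base model’s general training alone.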

Such LLMs could also help with feature discovery. By monitoring how users interact with the software or device, an LLM could identify patterns of use that other features of the product could improve, and guide the user toward those features. It might even be possible for devices to discover features on other devices in the same environment and collaborate with them.

All of this is only possible if the chips and software get inexpensive enough to embed in common devices. We’re not there yet, but the trend is in the right direction.

The Potential Privacy ?Benefits?

I can practically hear some tech skeptics gasping at the idea of every device talking and listening to you. What about privacy? What about data security?

Many people raised these legitimate concerns about the Internet of Things. I spent a lot of time working on the FTC’s report on the Internet of Things, and that report has some relevant recommendations for connected and intelligent devices. (See also Commissioner Ohlhausen’s separate statement, which highlights key strengths and weaknesses of the report.) Companies should engineer devices and establish policies and processes with these concerns in mind.

But Intellect of Things concerns aren’t identical to Internet of Things concerns. One particular difference is that cheap embedded LLMs wouldn’t necessarily need to be connected to the internet. Unlike current smart home devices, devices with embedded LLMs could offer sophisticated user interactions with entirely local processing, so user data need never leave the device. In other words, some smart devices will not be connected at all.

I’m not sure how large the “smart, disconnected devices” category will be. I couldn’t find much information on the cost/functionality trends for on-device speech recognition and generation. Voice seems like the obvious way to interact with on-device LLMs. If speech recognition and generation cannot be done efficiently on-device, that could limit privacy-enhancing, entirely on-device interactions.

Even if an AI interface can be provided without connectivity, collecting usage data remains valuable for improving the LLM and the underlying product, which gives manufacturers a reason to connect devices anyway. Will the privacy and robustness benefits of running without connectivity outweigh the benefits of being connected?

Probably, in some cases!

But my big takeaway from Mollick’s piece is that I bring preconceived frameworks to this new technology. App-based chatbots are merely the start of what LLMs will be used for. We need social, business, and policy frameworks that can accommodate a wide range of new uses, many of them difficult to anticipate. Similarly, embedding AI in devices will raise different issues than just connecting devices to the internet.

I want to keep challenging and testing my frameworks and analogies for thinking about AI. Ethan Mollick’s piece did that for me; please share other pieces that have done that for you!